OpenSauce 2024

We created a fun robot project for OpenSauce 2024!

How Did We Get Here?

We attended OpenSauce in 2023 and thought there were a bunch of really neat inventions. When applications opened in February 2024, we knew we had to make something to exhibit. One TinyTech team member had this old robot from his childhood that no longer worked, so we wanted to see if we could rebuild it to be functional again.

As fate would have it, someone on eBay was also selling 16 of these little guys for $1000. For some reason nobody was buying them, so the seller dropped the price to $600, then $300. At that point we were losing money by not buying them, so we made the purchase. Now we had over a dozen robots (without batteries) and needed to figure out what to do with them...

Original Robot

eBay Listing

Breathing New Life Into Old Bots

We had big plans: maybe these robots could play sports? Or fight? Or maybe even dance? Unfortunately, these little bots were limited to the canned animations they were pre-programmed with. These let them walk, talk, and perform little punch and kick animations that were more for show than anything. To make matters worse, only two robots could be used at a time because they only have two infrared (IR) channels to connect to. Team sports were off the table...

And that was when the robots were new! By the time we received them, most had board issues, broken servos, cracked plastic, and a variety of other... ailments. We got pretty good at taking the working parts from multiple broken bots and re-assembling working ones, but there was no fix for the limited functionality.

We searched online and found little in the way of hacking this robot. We found one project that automated the IR signals from a microcontroller, but we wanted direct motor access. Everything changed when we stumbled across the conveniently named i-sobothacking.blogspot.com, which broke down the main components of the control board and the motor control protocols. It turns out each limb is on its own serial port running at 2400 baud (except for the head, which is tacked onto the right arm port). An initialization byte 0x05 is sent first, followed by the joint angles as a sequence of bytes starting at the torso and working down the limb. A checksum is sent as the seventh byte, and the packet is closed with a 0xFF byte.

Now that we knew it was possible to inject data directly into the servos, we needed to build some hardware to test it. First we tried etching our own PCB from some flexible PCB material we had on hand, connecting it to the UART output of a Raspberry Pi Zero (a digital logic AND gate, driven by the Pi's digital outputs, switched the UART between the limbs). This technically worked, but the single serial channel had to be switched between the four ports, which caused stuttery motion. We then switched to a Teensy 4.0 microcontroller for its multiple serial channels and soldered everything into the body by hand. At this stage we included a speaker driver, an IMU, and a data connector to attach a Raspberry Pi later. This worked great, but there was no way it could be scaled up to more than one robot.
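Some back-of-the-envelope arithmetic shows why sharing one UART across four limbs stutters. This Python sketch assumes standard 8N1 framing (10 bits on the wire per byte) and a fixed 8-byte packet (0x05, five angles, checksum, 0xFF); neither is confirmed for the i-SOBOT servos, and the time spent switching ports is ignored, so treat the numbers as upper bounds.

```python
BAUD = 2400         # bits per second on each limb's servo port
BITS_PER_BYTE = 10  # ASSUMPTION: 8N1 framing (start bit + 8 data bits + stop bit)
PACKET_BYTES = 8    # ASSUMPTION: 0x05 + five angles + checksum + 0xFF
LIMB_PORTS = 4


def packets_per_second(baud=BAUD, packet_bytes=PACKET_BYTES):
    """Upper bound on complete packets one UART can send per second."""
    return baud / (BITS_PER_BYTE * packet_bytes)


dedicated = packets_per_second()            # one UART per limb (Teensy 4.0 design)
shared = packets_per_second() / LIMB_PORTS  # one UART switched across four limbs
print(f"shared: {shared:.1f} updates/s per limb, "
      f"dedicated: {dedicated:.1f} updates/s per limb")
# shared: 7.5 updates/s per limb, dedicated: 30.0 updates/s per limb
```

Under these assumptions, giving each limb its own serial port quadruples every limb's update rate compared with the multiplexed Pi Zero design.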

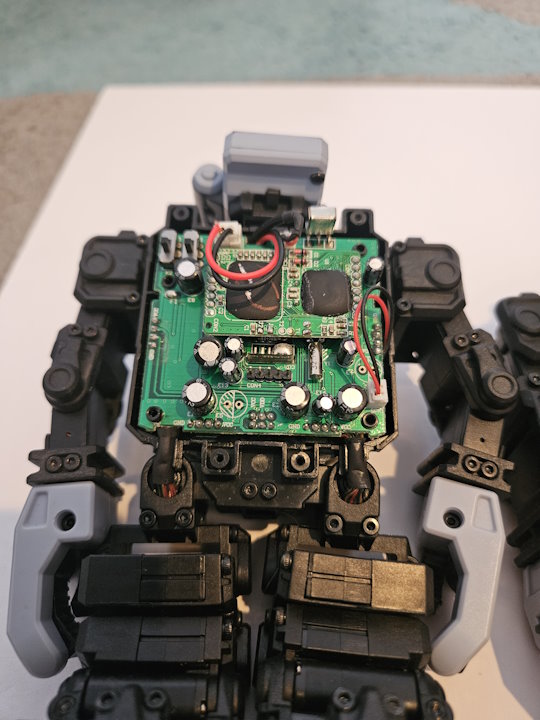

Original Board

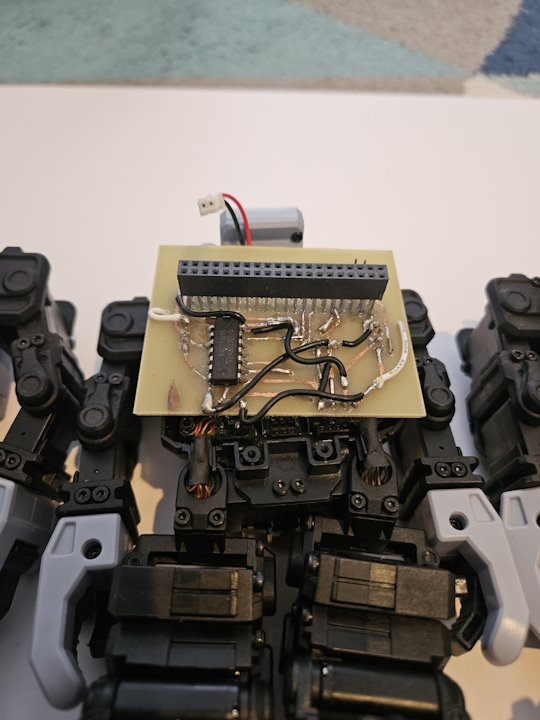

Raspberry Pi Board (v0)

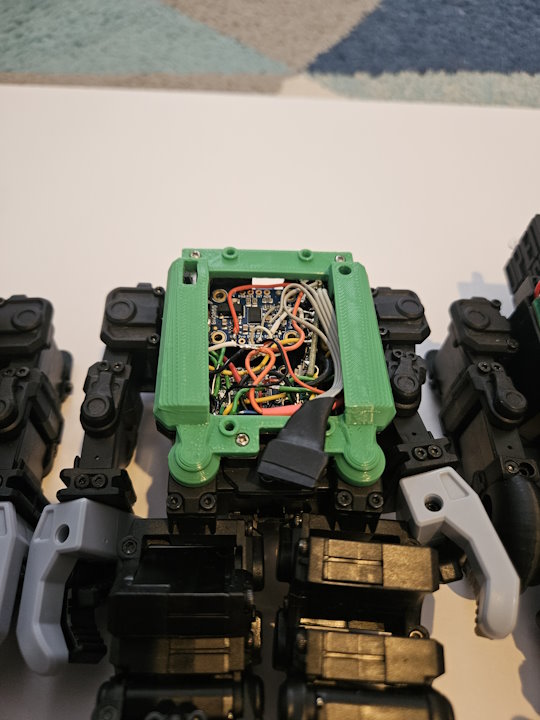

Hand-Soldered Board (v1)

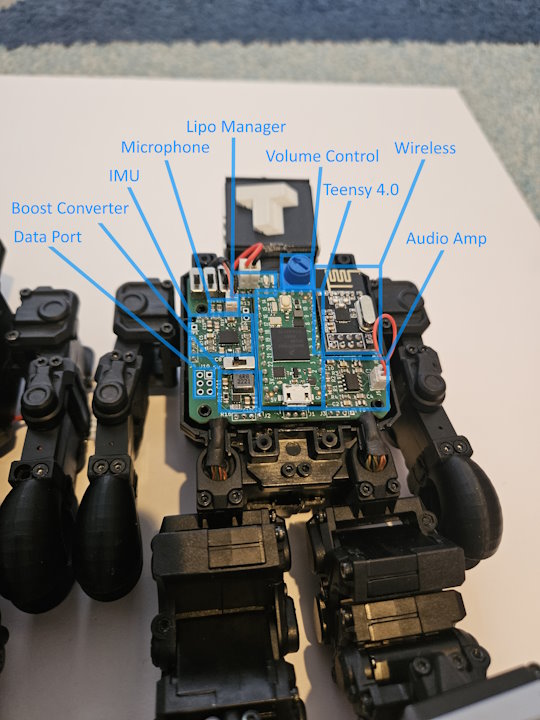

Around this point we realized we needed a custom PCB. We used what we learned with the previous two designs to make the following list of features:

- High-speed microcontroller with at least 5 UART ports

- Audio amplifier to use the robot's included speaker (with volume control)

- LiPo management for safe discharging and charging of a 1S battery

- Boost converter to run 5V electronics from the 3.7V LiPo

- IMU for absolute orientation measurement

- Short range, high-speed wireless module

- A data port to connect directly to an external microcomputer

- Microphone input to enable voice control

We selected the Teensy 4.0 microcontroller, PAM8302AAD audio driver, MCP73831 LiPo charger, TPS610997DRV boost converter, BNO055 IMU, and nRF24L01 wireless module. It took a couple of tries to get the board right, but we ended up with a PCB that had every feature we wanted. We hand-soldered each board, and after a couple of days we had 10 fully populated boards! The IMU was the hardest part to solder, and of the 10 boards, 9 had functional IMUs. The tenth board was marked as the transmitter and set aside.

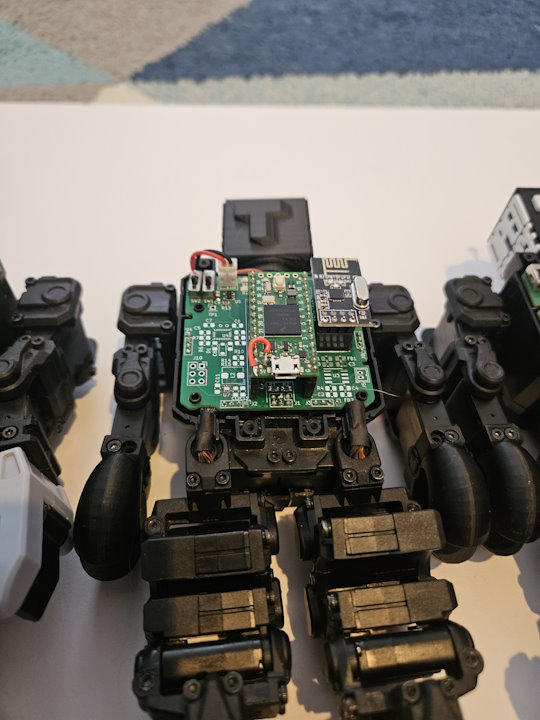

First PCB Attempt (v2)

Second PCB Attempt (v3)

Pile of Boards

Developing Soft Skills

We now had a bunch of robots that could do cool things in theory. In practice, they could only hold whatever pose they were in when turned on (exciting!). When we ripped out their original brains, we also removed all of the animations, audio clips, and basically everything needed to make them interesting. We were starting from scratch, and it was going to be a lot of work to get them running (or at least walking).

We whipped up a quick Arduino script to move all of the servos to their "zero" positions, which put the robot in a T-pose like most video game characters. We were able to hard-code some new positions, but creating a complex animation (like a walking sequence) by hand was nearly impossible. It would be a lot easier to animate the robot like a video game character and somehow export that onto the robot...

Anyway, it turns out it is pretty easy to write a Blender plugin that converts the local joint rotations to signed integers and bundles them into 17-byte packets, which write the joint angles to the robot over a serial connection. The Blender project uses a relatively accurate model of the robot, with joint constraints in place to prevent the joints from moving in impossible ways. We were able to reach a data rate of around 50 animation frames per second for the smoothest motion. We also developed a simple animation export format where each frame of 17 joint angles is written to a .h file that the Arduino code can read to play the sequence when desired.
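A minimal Python sketch of the two conversion steps described above: packing 17 joint rotations into a 17-byte frame, and writing frames out as a C array in a .h file. It assumes each joint is sent as one signed byte in whole degrees (clamped to the int8 range); the function and array names are hypothetical, and the Blender (`bpy`) side that reads rotations off the armature is left out, so rotations are passed in as plain floats in radians.

```python
import math
import struct

JOINTS = 17  # one value per servo


def encode_frame(rotations_rad):
    """Pack 17 local joint rotations (radians) into a 17-byte frame.

    ASSUMPTION: one signed byte per joint, whole degrees, clamped to int8.
    """
    if len(rotations_rad) != JOINTS:
        raise ValueError(f"expected {JOINTS} joints, got {len(rotations_rad)}")
    degrees = [max(-128, min(127, round(math.degrees(r)))) for r in rotations_rad]
    return struct.pack(f"{JOINTS}b", *degrees)


def export_header(name, frames):
    """Render frames as a C array that the Arduino sketch can #include."""
    rows = ",\n".join(
        "  {" + ", ".join(map(str, struct.unpack(f"{JOINTS}b", f))) + "}"
        for f in frames
    )
    return ("#pragma once\n#include <stdint.h>\n\n"
            f"const int8_t {name}[{len(frames)}][{JOINTS}] = {{\n{rows}\n}};\n")


t_pose = encode_frame([0.0] * JOINTS)  # every servo at its "zero" position
# HYPOTHETICAL second frame: only the first joint rotated to 45 degrees.
bent = encode_frame([math.radians(45)] + [0.0] * (JOINTS - 1))
print(export_header("wave_anim", [t_pose, bent]))
```

Keeping the animation as a `const` array means the sequence is compiled into the firmware, so playback needs no file system on the robot.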

Trouble in Paradise

We submitted a pretty lame application to exhibit at OpenSauce 2024 in early March. We had a proof-of-concept PCB that we had started developing software for, but we were a brand new team with no socials and no YouTube videos, just promises that we could somehow get all these robots ready to play sports, fight, and dance before the event in June. We actually paused the project for about a month after not hearing whether we had been accepted. In April we reached out to one of the event coordinators and learned we had been reviewed and placed in the "more info needed" pile. We had tall promises but no proof that anything actually worked.

Oops.

The event coordinator was super nice and transparent, and we were far enough into the project to be confident we could deliver. We cleared our schedule to prioritize our first YouTube video and started planning what we would talk about. In the interim, we sent the following video of the first prototype robot walking under direct control of our animation software.

The next day we got the following Discord DM from the event coordinator we were talking to:

Bingo

The William Osman (the guy who founded OpenSauce) saw our early development video and decided to accept our entry! Now we just needed to complete the rest of the project in two months.

Let the Games Begin

Hardware? Check. Software? Check? An interesting series of fast-paced robot games for people to play? Not quite. We wanted "sports, fighting, and dancing", but what does that actually mean? We found the robots are small enough that they can kick around a ping-pong ball like they're playing soccer. That pretty much just involves walking, and seemed doable. Fighting is just walking into position and using the robots' arms to punch other robots. And dancing can just be the robots moving into random poses in a somewhat coordinated fashion. Shouldn't be too hard.

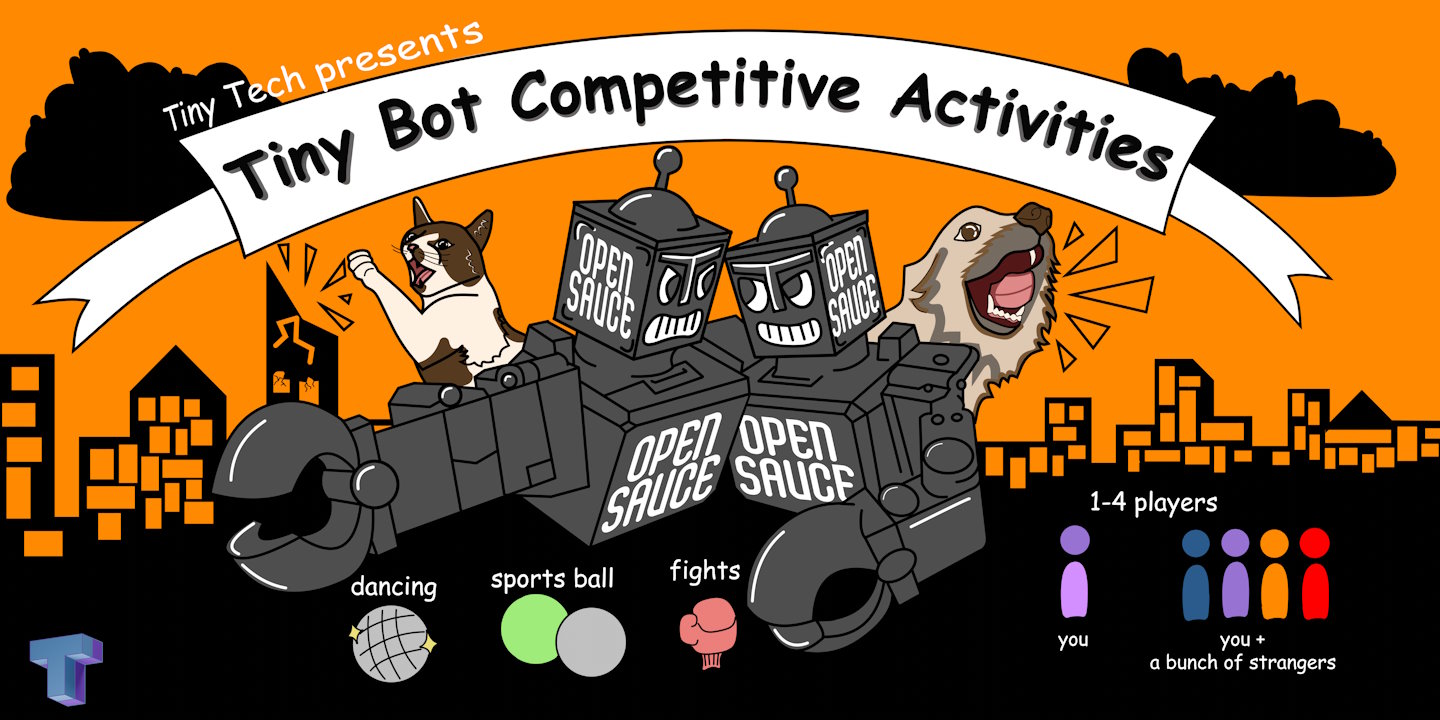

With the game selection and project scope nailed down, we could finally make a poster fitting of OpenSauce (the cat and dog are our pets Gizmo and Sassy, respectively):

OpenSauce Banner

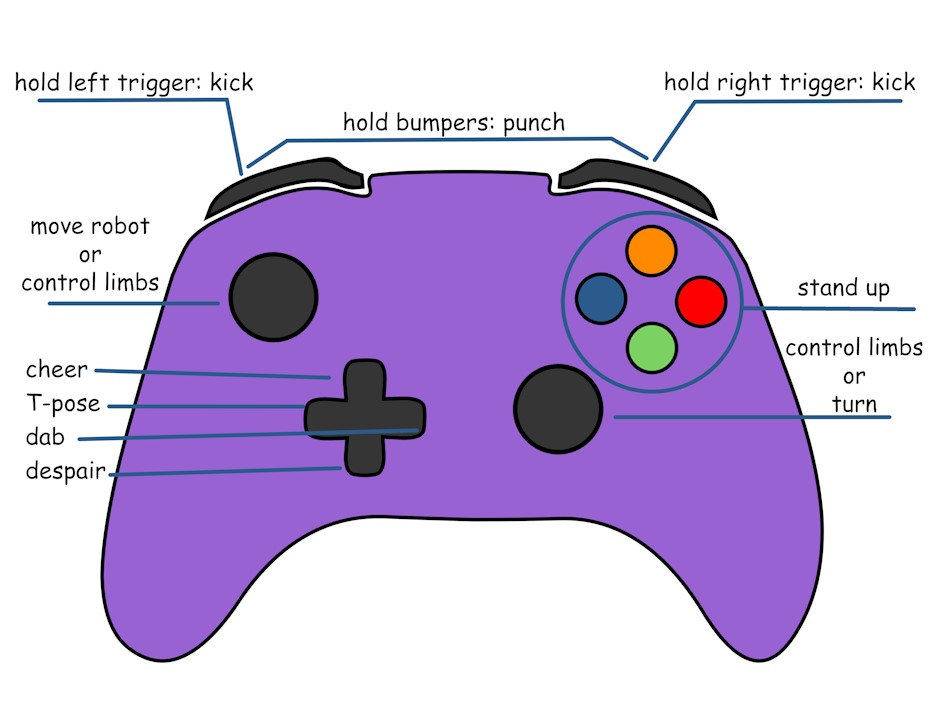

To control the robots we used knockoff wired Xbox 360 controllers. These were great because they had analog inputs like the real thing but cost only around $20 apiece. A Python program interpreted these inputs and sent commands directly to the robots to perform actions like walking forwards and backwards, strafing side to side, turning, standing up after falling over, or even playing taunts. We even worked in button combos to kick and punch using the bumpers and triggers!

Controller Layout
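Before stick positions become walk commands, they usually need a deadzone, since analog sticks rarely rest at exactly zero. This Python sketch assumes axes already normalized to [-1, 1] (as libraries like pygame report them); the 0.15 deadzone and the stick-to-action mapping are hypothetical, not the exhibit's actual layout.

```python
def apply_deadzone(value, deadzone=0.15):
    """Map a raw stick axis in [-1, 1] to [-1, 1] with a centre deadzone.

    Readings inside the deadzone become 0, and the remaining travel is
    rescaled so output still ramps smoothly from 0 up to full deflection.
    """
    magnitude = abs(value)
    if magnitude < deadzone:
        return 0.0
    scaled = min(1.0, (magnitude - deadzone) / (1.0 - deadzone))
    return scaled if value > 0 else -scaled


def stick_to_command(left_x, left_y, right_x):
    """HYPOTHETICAL mapping: left stick walks and strafes, right stick turns."""
    return {
        "forward": apply_deadzone(-left_y),  # many controller APIs report "up" as negative y
        "strafe": apply_deadzone(left_x),
        "turn": apply_deadzone(right_x),
    }


print(stick_to_command(0.05, -1.0, 0.6))
```

Rescaling after the deadzone (rather than just zeroing small values) avoids a sudden jump in speed at the deadzone edge, which matters when the output drives a walking gait.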

We discovered pretty early on that pre-made animations for moving were not very fun to use. Even if we interrupted the animation every step, it was hard to maintain momentum and the controls felt sluggish. Plus the "punch" and "kick" actions had trouble making contact and the robots fell over frequently when doing most actions.

To fix this, we integrated a "dynamic" walking algorithm that ran an internal clock to lean the robot side to side along a sine wave, then added or subtracted sine or cosine terms to the other leg joint angles, scaled by how far the controller joysticks were pushed. This meant we could also mix inputs from multiple axes to walk diagonally or turn while walking forwards or backwards.
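The walking scheme described above can be sketched in a few lines of Python. A clock drives a sine wave that sways the robot side to side, while the leg joints get cosine terms scaled by each joystick axis; because the terms simply add, pushing the stick diagonally blends forward walking with strafing. Every joint name, gain, and the 1.5 Hz step frequency here is a hypothetical placeholder, and the returned offsets would be added to a standing pose before sending.

```python
import math
from dataclasses import dataclass


@dataclass
class Stick:
    forward: float  # each axis in [-1, 1], already deadzoned
    strafe: float
    turn: float


def gait_offsets(t, stick, freq_hz=1.5, lean_deg=8.0, stride_deg=15.0,
                 strafe_deg=6.0, turn_deg=10.0):
    """Joint offsets in degrees to add to a standing pose at time t (seconds).

    HYPOTHETICAL joint names, gains and step frequency.
    """
    phase = 2.0 * math.pi * freq_hz * t
    # Sway only while a stick is pushed, so a centred controller means standing still.
    effort = min(1.0, max(abs(stick.forward), abs(stick.strafe), abs(stick.turn)))
    lean = lean_deg * effort * math.sin(phase)  # side-to-side weight shift
    swing = math.cos(phase)  # leg motion, 90 degrees out of phase with the sway
    return {
        "ankle_roll_l": lean,
        "ankle_roll_r": lean,
        "hip_roll_l": strafe_deg * stick.strafe * swing,
        "hip_roll_r": -strafe_deg * stick.strafe * swing,
        "hip_pitch_l": stride_deg * stick.forward * swing,
        "hip_pitch_r": -stride_deg * stick.forward * swing,
        "hip_yaw_l": turn_deg * stick.turn * swing,
        "hip_yaw_r": -turn_deg * stick.turn * swing,
    }


# Diagonal input: forward and strafe terms are simply summed into the pose.
print(gait_offsets(0.1, Stick(forward=0.7, strafe=0.7, turn=0.0)))
```

Using cosine for the legs puts them 90 degrees out of phase with the sway, so each leg passes through neutral at its fastest while the weight is shifted fully to one side.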

Crunch Time

We had about a month before the big event. Our team spans both the US and Scotland, which meant that between work and life we only had a few hours each weekend to actively work together. Our lead software developer didn't even have access to a robot to test his game code on, so one was mailed to him overseas. Being the impatient bunch we are, he also developed a web server that transmitted his controller inputs to robots connected to a host transmitter on another computer.

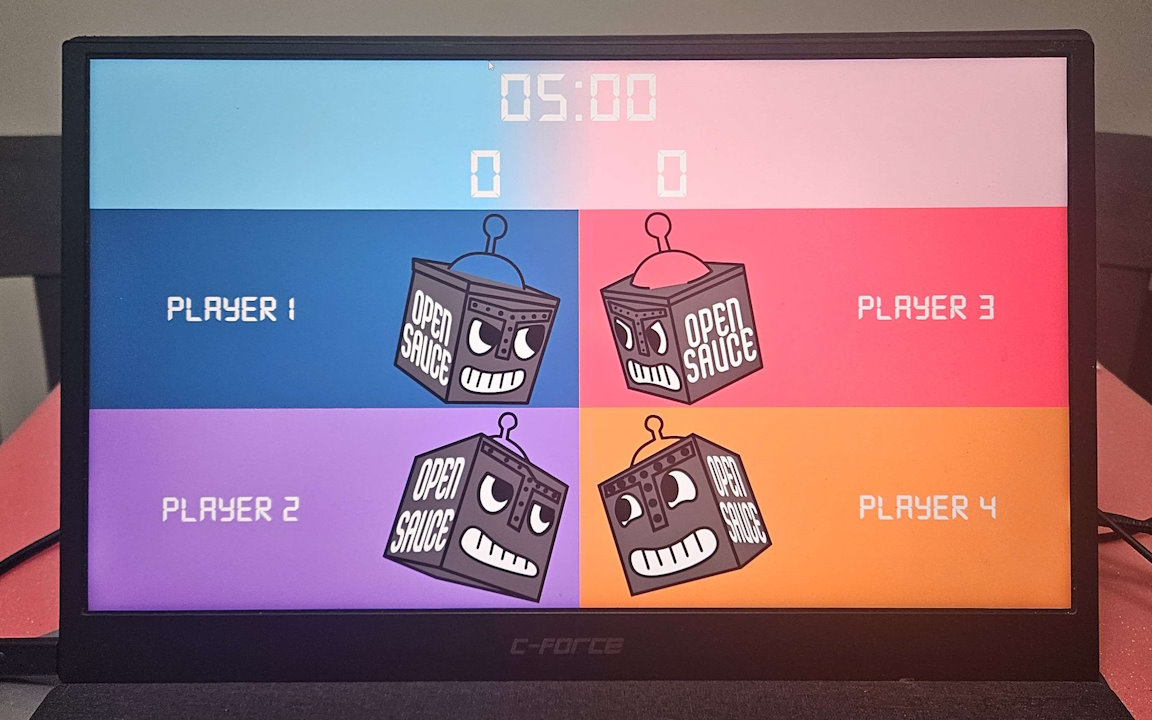

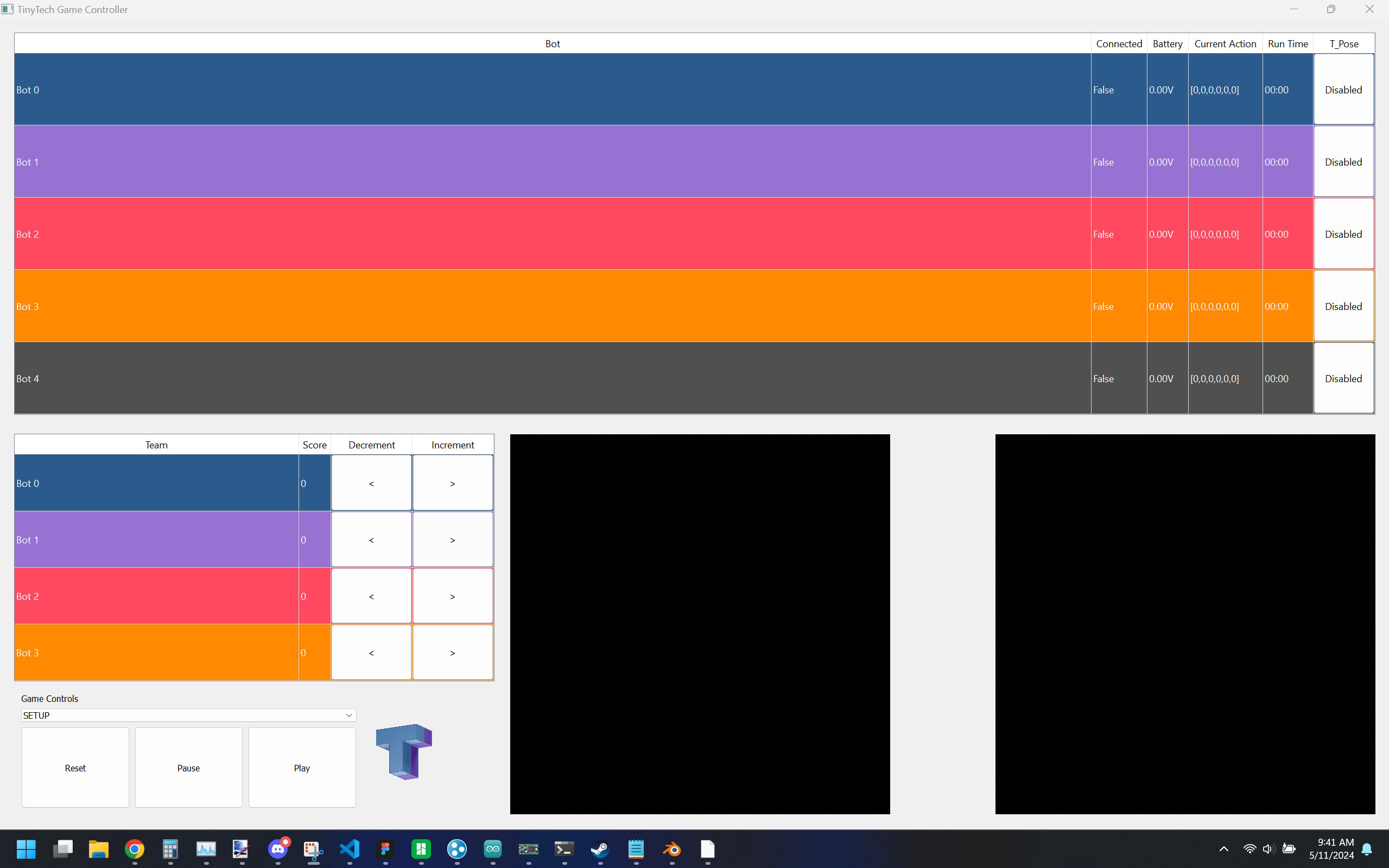

This was pretty neat functionality, but it quickly dawned on us what this meant: we could now run full playtests with anyone on the internet. After multiple iterations we developed two interfaces: a scoreboard for the player to see and a development interface for us to run the games with.

Scoreboard

Game Console

At this point nearly all of the robots were done. Each one had its servos repaired and its speaker, hands, front plate, back plate, and head replaced. Some soldering was needed to update the wiring of the LEDs in the head, the speaker wires, and the battery connector.

It should be noted that we really only needed a max of four robots for the games. Unfortunately our test robot broke on multiple occasions from minor use, and we were terrified we'd have multiple robots die throughout the three-day event. That's why we ended up bringing a total of seven player robots (more on that later) so they could be swapped out and repaired throughout the weekend.

The Robot Army (Front)

The Robot Army (Back)

We turned our attention to the area the robots would operate in. We wanted a large, flat, textured surface for the bots to walk on. We tried a few materials but settled on a sheet of polycarbonate with matte vinyl added for extra grip. Other decals were designed and cut on our Cricut to add the team's logo and various field lines. These were all stuck to the underside to keep the top surface smooth. The size of the sheet was dictated by the size of our Pelican case. Short colored walls were 3D printed so the ping-pong ball wouldn't fall out of the play area, and the goals were designed with infrared sensors to detect when the ball passes into them.

Patiently waiting for the game to begin...
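Turning a raw IR beam-break into a single goal needs a little edge detection; otherwise a ball resting in the goal or bouncing through the beam could score repeatedly. The transmitter firmware would be Arduino code, but the logic is sketched here in Python; it assumes the sensor reads `True` while the beam is clear, and the class name and 0.75 s lockout are hypothetical values, not the exhibit's tuning.

```python
class GoalSensor:
    """Count a goal once per beam break, ignoring flicker right afterwards.

    ASSUMPTIONS: the sensor reads True while the IR beam is unbroken, and
    the lockout period is a made-up value.
    """

    def __init__(self, lockout_s=0.75):
        self.lockout_s = lockout_s
        self.was_clear = True
        self.last_goal_t = float("-inf")

    def update(self, beam_clear, t):
        """Feed one sample taken at time t (seconds); return True on a new goal."""
        goal = (
            self.was_clear and not beam_clear              # falling edge: ball entered
            and t - self.last_goal_t >= self.lockout_s     # not a bounce of the last goal
        )
        if goal:
            self.last_goal_t = t
        self.was_clear = beam_clear
        return goal


left_goal = GoalSensor()  # one instance per goal sensor
samples = [(True, 0.0), (False, 0.1), (False, 0.2), (True, 0.3), (False, 0.4)]
print([left_goal.update(clear, t) for clear, t in samples])
# [False, True, False, False, False]
```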

All of the robots on the field received data from one of the leftover PCBs, which was configured to act as a transmitter. We wanted the transmitter to be more exciting than a boring box, so we splurged on a special Japanese edition of the robot with a white plastic shell. This robot's electronics were further modified to add a large antenna, power from a wired supply, and ports to connect the goals' two IR sensors. It even played its own little animations when a goal was scored!
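The post doesn't describe the over-the-air format, but one constraint is fixed: an nRF24L01 packet carries at most 32 bytes of payload. This hypothetical Python sketch shows that a robot ID, a command byte, and all 17 signed joint angles fit in a single packet (19 bytes), with a reserved broadcast ID so one frame can drive every robot at once. The layout, names, and ID values are assumptions for illustration.

```python
import struct

NRF24_MAX_PAYLOAD = 32  # nRF24L01 limit: at most 32 payload bytes per packet
JOINTS = 17
BROADCAST_ID = 0xFF     # HYPOTHETICAL "every robot" address, e.g. for dance mode

# HYPOTHETICAL layout: robot ID (uint8), command (uint8), 17 joint angles (int8).
FRAME = struct.Struct(f"<BB{JOINTS}b")
assert FRAME.size <= NRF24_MAX_PAYLOAD  # 19 bytes, fits in a single packet


def pack_radio_frame(robot_id, command, angles):
    """Transmitter side: build one payload for a robot (or BROADCAST_ID)."""
    if len(angles) != JOINTS:
        raise ValueError(f"expected {JOINTS} angles, got {len(angles)}")
    return FRAME.pack(robot_id, command, *angles)


def unpack_radio_frame(payload, my_id):
    """Robot side: return (command, angles) if the frame is for us, else None."""
    robot_id, command, *angles = FRAME.unpack(payload)
    if robot_id not in (my_id, BROADCAST_ID):
        return None
    return command, angles
```

Filtering on the receiving robot keeps the transmitter simple: it sends every frame once, and each robot ignores frames not addressed to it or to everyone.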

Accidental Killer App

We had about a week to go, and the soccer and fighting games were working well and were quite possibly fun? We ran a couple of playtests through our Discord server and had the games just about balanced, but one element still eluded us: what would we do for the dance mode? We implemented random motions that would be transmitted to all robots at once for a coordinated dance, but that wasn't interactive. Sure, it kept the robots moving and drew people to our table, but we wanted more.

Since everything was basically finished, we started experimenting with Google's MediaPipe for motion tracking. We thought there could be a game in matching the robot's pose as closely as possible (with the added benefit of making attendees look insane). This turned out to be pretty easy to implement: we took the positions of the player's hands and compared them to their shoulders. These image-space coordinates were then fed into a basic IK model of the robot's arms so we could directly compare the robot's pose to the player's. This had an accidental side effect: the player's motions could just be passed along to the robot using the exact same process.

We accidentally created a mimic mode where an army of little robots could match your arm, head, and hip movements in near real-time. This was a ton of fun to play with so we quickly added it as the default mode if a dance sequence was not running. Not too bad for a last-minute addition!
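The arm-tracking step can be sketched as planar two-link inverse kinematics. This Python version assumes the tracker (e.g. MediaPipe Pose) supplies shoulder and wrist positions in normalized image coordinates, rescales the hand offset to the robot's arm length, and solves for shoulder and elbow angles with the law of cosines. The function names and the player/robot arm-length parameters are hypothetical, and the robot's arms may have more degrees of freedom than this 2D model captures.

```python
import math


def hand_target(shoulder, wrist, player_arm_len, robot_arm_len):
    """Wrist position relative to the shoulder, rescaled to robot units.

    shoulder and wrist are (x, y) in normalized image coordinates. Image y
    grows downward, so it is flipped to make "up" positive.
    """
    scale = robot_arm_len / player_arm_len
    return ((wrist[0] - shoulder[0]) * scale, (shoulder[1] - wrist[1]) * scale)


def two_link_ik(x, y, upper, fore):
    """Planar two-link IK: (shoulder, elbow) angles in radians reaching (x, y)."""
    # Law of cosines for the elbow; clamp so unreachable targets still give a valid pose.
    c = (x * x + y * y - upper * upper - fore * fore) / (2 * upper * fore)
    elbow = math.acos(max(-1.0, min(1.0, c)))
    shoulder = math.atan2(y, x) - math.atan2(fore * math.sin(elbow),
                                             upper + fore * math.cos(elbow))
    return shoulder, elbow


tx, ty = hand_target((0.5, 0.4), (0.6, 0.2), player_arm_len=0.5, robot_arm_len=1.8)
print(two_link_ik(tx, ty, upper=1.0, fore=0.8))
```

For mimic mode, the resulting angles would be sent to the robot's arm servos instead of being compared against the robot's own pose.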

OpenSauce 2024

We're in the endgame now. Playtests were wrapped up and we were ready to pack everything into a single Pelican case for our flight to San Francisco. While we waited for our 24 LiPo batteries to discharge to a "safe" storage voltage, we cut our bright pink antistatic foam to size. In anticipation of the robots dropping like flies, we packed a glue gun, a soldering iron, a screwdriver kit, and an array of pliers and other tools. We even brought a level so we could make sure the play area was fair!

Packed and ready to go!

We put a luggage tracker and TSA-approved locks on for good luck, and we were off to the event! The trip was uneventful other than waking up at 2am to drive to the airport two hours away. We all reached the Cow Palace Event Center around 8:30am on Friday and had no issues getting to our table. After about two hours of reassembly, table leveling (shoutout to the robotics club next to us, who had extra 3D-printed "shims" we could use), and battery charging, we got the table up and running:

Success! None of the robots or other exhibit items were damaged in transit, and everything just worked. The first day was pretty slow since the Cow Palace was filled exclusively with other exhibitors, some VIP/Industry badge holders, and a few popular creators. The Hacksmith dropped by for a few minutes and was very friendly! We split up so there'd be two people at the table and two people roaming the event center, and holy mackerel, there was a lot of cool stuff to see! Unfortunately, the Friday night party was not held in the space our exhibit was in, but it was fun nonetheless. We got back to the hotel late and exhausted, but ready to get up bright and early for the main event!

Neat Diorama

Cursed Furby Robot

Giant Gameboy Camera

Back at our table, things were going well. We had a brief snag where all of the robots needed a code update and we didn't have a spare micro-USB cable to do it. Fortunately, we met TheLastMillenial, who was running the TI-84 calculator hacking booth and had a spare we could borrow. We started the event with the minimum number of robots needed (3 for dance mode and 4 for fighting/soccer), but after the first day we realized the robots were a lot more robust than we had anticipated. We did some napkin math and realized we had enough batteries to continuously run up to 6 robots at once in dance mode (still four for the other modes because of controller limitations). We were interviewed by three groups, including CodeMiko, whose Twitch recording we attached below.
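The napkin math for continuous operation comes down to two limits: how many packs are out of the charger at any moment, and how fast the chargers can refill them. This Python sketch uses illustrative numbers only; the post doesn't give pack runtime, charge time, or the number of chargers, and pack swap time is ignored.

```python
def max_continuous_robots(batteries, runtime_min, charge_min, chargers):
    """Most robots that can run nonstop on a rotating pool of battery packs.

    Two limits apply (pack swap time is ignored):
    - packs: each spends runtime_min in a robot, then charge_min charging, so
      on average only batteries * runtime / (runtime + charge) are in robots;
    - chargers: each charger finishes a pack every charge_min, while each
      robot needs a fresh pack every runtime_min.
    """
    by_packs = batteries * runtime_min / (runtime_min + charge_min)
    by_chargers = chargers * runtime_min / charge_min
    return int(min(by_packs, by_chargers))


# Illustrative numbers only, not measurements from the exhibit.
print(max_continuous_robots(batteries=12, runtime_min=20, charge_min=60, chargers=10))  # 3
```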

By the end of the first day a couple of us had lost our voices from talking to so many people (probably a couple thousand?). We could sit down for maybe 10-30 seconds at a time before someone stopped by to chat. Some people wanted to know what exactly our project was, some only wanted to dance in front of the robots, some (mostly kids) wanted to drive them with the controllers, and a few asked what our monetization strategy was. People were routinely surprised to hear that we made it just for the event and that the bots were not for sale.

None of the bots died during the whole event (even after one toppled off the table), nothing was stolen, and overall everything went perfectly. We had no idea the dance mode would be so fun (plus it was a great "hook", since we could ask passers-by to stand on a spot marked on the floor), and it makes us wonder how we could improve it next time. We think adding more motion capabilities would be fun (legs, body pitch, etc.), but the main request was to add motion tracking to the fight mode (Real Steel style). We have big plans for next year; let's hope we make it!

Follow Us!